💡 How it works

Modular design

Putting data directly into storage is the easiest way to make information accessible to smart contracts. This approach worked well for long update intervals and a small number of assets. However, more and more tokens are coming to DeFi, and modern derivative protocols require much lower latency, which drives up the maintenance costs of the simple model.

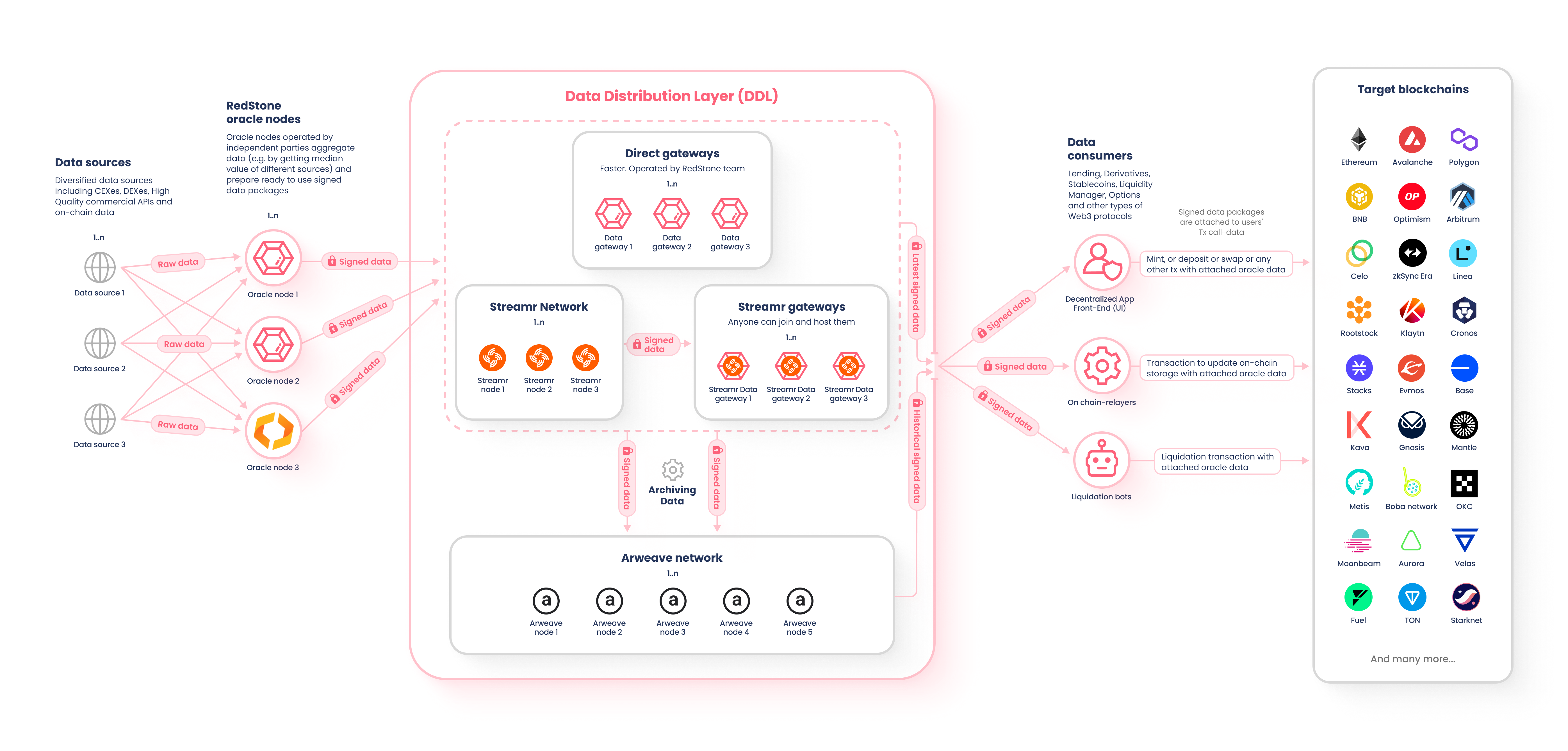

That is why RedStone proposes a completely new modular design in which data is first put into a data availability layer and then fetched on-chain. This allows us to broadcast a large number of assets at high frequency to a cheaper layer and put the data on-chain only when the protocol requires it.

3 Ways to integrate

Depending on the smart contract architecture and business demands, we can deliver data using 3 different models:

- RedStone Core: data is dynamically injected into user transactions, achieving maximum gas efficiency and maintaining a great user experience, as the whole process fits into a single transaction.

- RedStone Classic: data is pushed into on-chain storage via a relayer. It is dedicated to protocols designed for the traditional oracle model that want full control of the data source and update conditions.

- RedStone X: targets the needs of the most advanced protocols, such as perpetuals, options and derivatives, by eliminating front-running risk through price feeds provided at the very next block after the user's interaction.

Data Flow

The price feeds come from multiple sources, such as off-chain centralized exchanges (Binance, Coinbase, Kraken, etc.), on-chain DEXes (Uniswap, SushiSwap, Balancer, etc.) and aggregators (CoinMarketCap, CoinGecko, Kaiko). Currently, we've got more than 50 sources integrated.

The data is aggregated in independent nodes operated by data providers, using various methodologies (e.g. median, TWAP, LWAP) and safety measures such as outlier detection. The cleaned and processed data is then signed by the node operators, who thereby underwrite its quality.
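The node-side aggregation described above can be sketched roughly as follows. This is a minimal illustration, not RedStone's actual node code: the function name aggregate_prices, the source labels and the 10% max_deviation cut-off are all assumptions made for the example. It drops quotes that stray too far from the raw median and then takes the median of what remains.

```python
from statistics import median


def aggregate_prices(quotes: dict[str, float], max_deviation: float = 0.1) -> float:
    """Return the median of the quotes that lie within max_deviation of the raw median.

    Assumes all prices are positive. The 10% default is illustrative only.
    """
    if not quotes:
        raise ValueError("no quotes to aggregate")
    raw_median = median(quotes.values())
    # Outlier detection: keep only quotes close enough to the raw median.
    kept = [p for p in quotes.values() if abs(p - raw_median) / raw_median <= max_deviation]
    if not kept:
        raise ValueError("all quotes were rejected as outliers")
    return median(kept)


# Hypothetical quotes from four sources; source_d is clearly off.
quotes = {"source_a": 100.0, "source_b": 101.0, "source_c": 99.5, "source_d": 250.0}
aggregated = aggregate_prices(quotes)  # source_d is dropped, the result is 100.0
```

A time-weighted or liquidity-weighted average (TWAP, LWAP) would replace the final median call with a weighted mean over the kept quotes.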

The feeds are broadcast both on the Streamr network and directly to open-source gateways, which can easily be spun up on demand.

The data can be pushed on-chain by a dedicated relayer operating under predefined conditions (e.g. a heartbeat or a price deviation), by a bot (e.g. one performing liquidations), or even by end users interacting with the protocol.
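To make the relayer conditions concrete, here is a small sketch of a heartbeat-or-deviation trigger. The names RelayerConfig and should_push_update, as well as the example thresholds, are hypothetical and only illustrate the idea; an actual relayer may be configured differently.

```python
from dataclasses import dataclass


@dataclass
class RelayerConfig:
    heartbeat_seconds: int      # maximum time allowed between on-chain updates
    deviation_threshold: float  # relative price change that forces an update


def should_push_update(
    config: RelayerConfig,
    last_price: float,
    last_update_ts: int,
    new_price: float,
    now_ts: int,
) -> bool:
    """Return True when either the heartbeat or the deviation condition is met.

    Assumes last_price is positive.
    """
    heartbeat_elapsed = now_ts - last_update_ts >= config.heartbeat_seconds
    deviation = abs(new_price - last_price) / last_price
    return heartbeat_elapsed or deviation >= config.deviation_threshold


# Hypothetical settings: update at least hourly, or on a 0.5% price move.
config = RelayerConfig(heartbeat_seconds=3600, deviation_threshold=0.005)
```

With these settings, a 0.1% move one minute after the last update would not trigger a push, while a 1% move would.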

Inside the protocol, the data is unpacked and cryptographically verified, checking both its origin and its timestamps.

Data Format

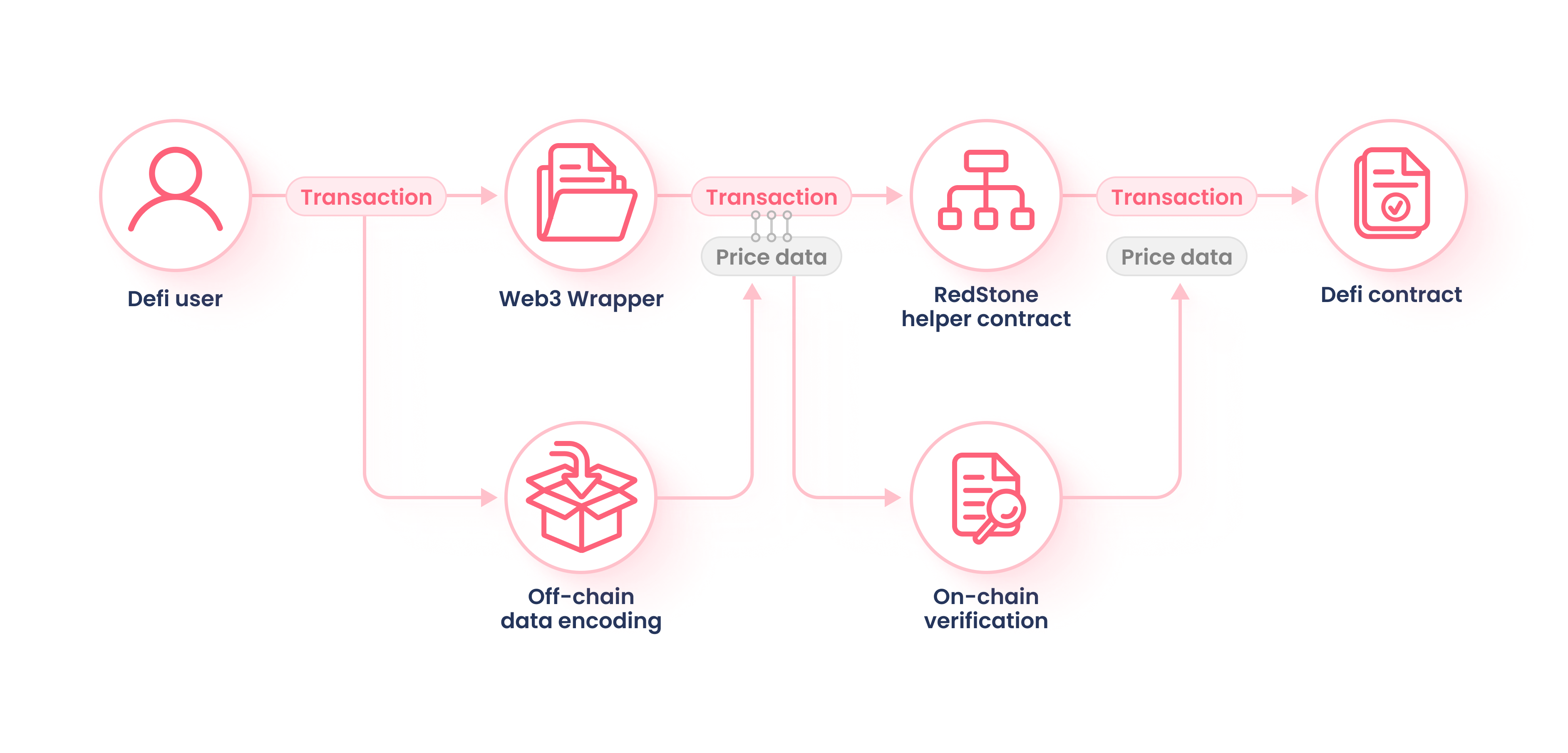

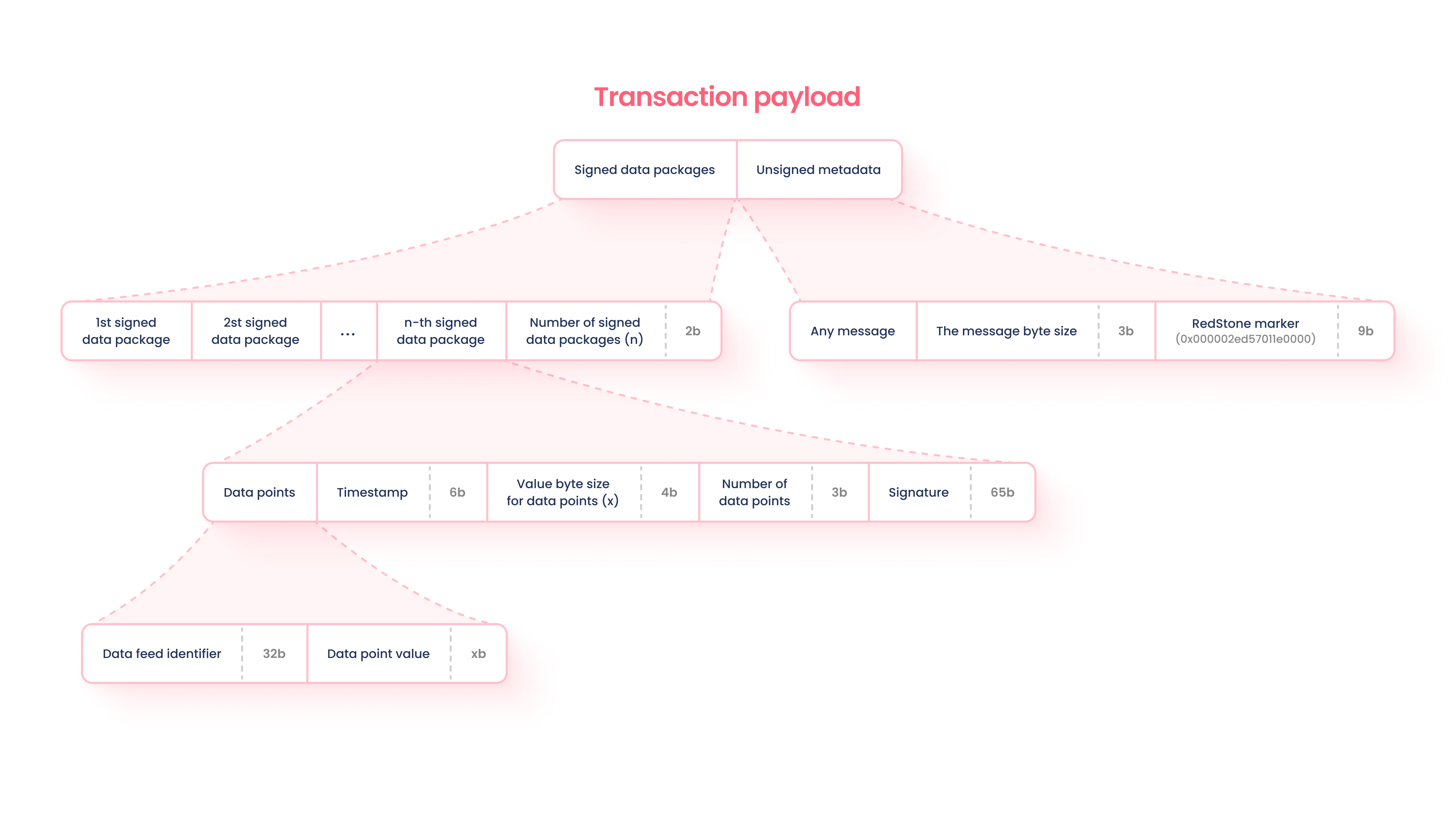

At a high level, transferring data to an EVM environment requires attaching an extra payload to a user's transaction and processing the message on-chain.

Data packing (off-chain data encoding)

- Relevant data needs to be fetched from the decentralized cache layer, powered by the Streamr network and the RedStone light cache nodes

- Data is packed into a message according to the following structure

- The package is appended to the original transaction message, signed and submitted to the network

All of the steps are executed automatically by the ContractWrapper and are transparent to the end user.
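The idea of appending a payload to the transaction calldata can be sketched as follows. The byte layout used here (field order, field sizes and the trailing package count) is an assumption made purely for illustration and should not be read as the actual RedStone wire format. The helper names, the placeholder selector and the all-zero signature are hypothetical as well.

```python
FEED_ID_BYTES = 32    # assumed: ASCII feed id, right-padded with zero bytes
VALUE_BYTES = 32      # assumed: 256-bit big-endian value
TIMESTAMP_BYTES = 6   # assumed: millisecond timestamp
SIGNATURE_BYTES = 65  # assumed: fixed-size provider signature


def pack_data_package(feed_id: str, value: int, timestamp_ms: int, signature: bytes) -> bytes:
    """Serialize one signed data package into a fixed-size byte string."""
    feed = feed_id.encode("ascii")
    if len(feed) > FEED_ID_BYTES:
        raise ValueError("feed id is too long")
    if len(signature) != SIGNATURE_BYTES:
        raise ValueError("signature must be exactly 65 bytes")
    return (
        feed.ljust(FEED_ID_BYTES, b"\x00")
        + value.to_bytes(VALUE_BYTES, "big")
        + timestamp_ms.to_bytes(TIMESTAMP_BYTES, "big")
        + signature
    )


def append_payload(calldata: bytes, packages: list[bytes]) -> bytes:
    """Append the packages to the original calldata, followed by a 2-byte package count."""
    return calldata + b"".join(packages) + len(packages).to_bytes(2, "big")


original_calldata = bytes.fromhex("a9059cbb")   # placeholder 4-byte function selector
placeholder_signature = bytes(SIGNATURE_BYTES)  # zeros; a real one comes from the provider
package = pack_data_package("ETH", 2000 * 10**8, 1_700_000_000_000, placeholder_signature)
payload = append_payload(original_calldata, [package, package])
```

Placing the count at the very end lets a reader parse the extra payload backwards from the end of the calldata without disturbing the original function arguments.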

Data unpacking (on-chain data verification)

- The appended data packages are extracted from the msg.data

- For each data package we:

  - Verify if the signature was created by a trusted provider

  - Validate the timestamp, checking if the information is not obsolete

- Then, for each requested data feed we:

  - Calculate the number of received unique signers

  - Extract value for each unique signer

  - Calculate the aggregated value (median by default)

This logic is executed in the on-chain environment, and we optimised the execution using low-level assembly code to reduce gas consumption to the absolute minimum.
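The verification steps above can be modelled off-chain in a few lines. This is a simplified sketch rather than the contract logic itself: signature recovery is assumed to have already happened (the signer field holds the recovered address), failed checks raise an exception where a contract would revert, and the names DataPackage, integer_median and extract_feed_value are hypothetical.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class DataPackage:
    feed_id: str
    value: int
    timestamp_ms: int
    signer: str  # assumed to be already recovered from the package signature


def integer_median(values: list[int]) -> int:
    """Median that stays in integer arithmetic (floor of the two middle values)."""
    ordered = sorted(values)
    mid = len(ordered) // 2
    if len(ordered) % 2 == 1:
        return ordered[mid]
    return (ordered[mid - 1] + ordered[mid]) // 2


def extract_feed_value(
    packages: list[DataPackage],
    feed_id: str,
    trusted_signers: set[str],
    now_ms: int,
    max_age_ms: int,
    unique_signers_threshold: int,
) -> int:
    value_by_signer: dict[str, int] = {}
    for package in packages:
        # Every package must come from a trusted provider.
        if package.signer not in trusted_signers:
            raise ValueError(f"untrusted signer: {package.signer}")
        # Every package must be fresh enough.
        if now_ms - package.timestamp_ms > max_age_ms:
            raise ValueError("data package is obsolete")
        if package.feed_id == feed_id:
            # Keep one value per unique signer.
            value_by_signer.setdefault(package.signer, package.value)
    if len(value_by_signer) < unique_signers_threshold:
        raise ValueError("not enough unique signers")
    return integer_median(list(value_by_signer.values()))


now_ms = 1_700_000_000_000
trusted = {"0xA", "0xB", "0xC"}  # placeholder addresses
packages = [
    DataPackage("ETH", 2000, now_ms - 1000, "0xA"),
    DataPackage("ETH", 2010, now_ms - 1000, "0xB"),
    DataPackage("ETH", 1990, now_ms - 1000, "0xC"),
]
eth_value = extract_feed_value(packages, "ETH", trusted, now_ms, 180_000, 3)  # 2000
```

The 3-minute max_age_ms used here is an arbitrary example value.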

On-chain aggregation

To increase the security of the RedStone oracle system, we've created the on-chain aggregation mechanism. This mechanism adds a requirement of passing at least X signatures from different authorised data providers for a given data feed. The values from the different providers are then aggregated before being returned to a consumer contract (by default, we use the median). This way, even if a small subset of providers is corrupted (e.g. 2 of 10), it should not significantly affect the aggregated value.
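A quick numeric illustration of why the median tolerates a corrupted minority. The values below are made up for the example.

```python
from statistics import mean, median

honest_values = [100, 101, 99, 100, 102, 98, 100, 101]  # 8 honest providers
corrupted_values = [1_000_000, 1_000_000]               # 2 corrupted providers
reported = honest_values + corrupted_values

median_value = median(reported)  # 100.5, barely moved by the outliers
mean_value = mean(reported)      # above 200000, dominated by the outliers

# The protection only holds while corrupted providers are a minority.
majority_corrupted = [100] * 4 + [1_000_000] * 6
broken_median = median(majority_corrupted)  # 1000000.0
```

With 2 of 10 providers corrupted, the median stays within the honest range, while a plain average would be off by several orders of magnitude.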

The following functions in the RedStone consumer base contract control on-chain aggregation:

- getUniqueSignersThreshold function

- getAuthorisedSignerIndex function

- aggregateValues function (for numeric values)

- aggregateByteValues function (for bytes arrays)

Types of values

We support 2 types of data to be received in a contract:

- Numeric 256-bit values (used by default)

- Bytes arrays with dynamic size
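As a rough illustration of the numeric type, a price can be represented as a fixed-point integer stored in a 32-byte (256-bit) word. The 8-decimal precision and the helper names below are assumptions made for this example, not something stated on this page.

```python
from decimal import Decimal

DECIMALS = 8  # assumed fixed-point precision, for illustration only


def encode_numeric(price: str) -> bytes:
    """Scale a decimal price to an integer and store it as a 256-bit big-endian word."""
    scaled = int(Decimal(price) * 10**DECIMALS)
    return scaled.to_bytes(32, "big")


def decode_numeric(word: bytes) -> Decimal:
    """Inverse of encode_numeric."""
    return Decimal(int.from_bytes(word, "big")) / 10**DECIMALS


word = encode_numeric("1234.56")
decoded = decode_numeric(word)
```

A bytes array, by contrast, has no fixed width, so its length must travel with the data.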

Security considerations

- Do not override the getUniqueSignersThreshold function, unless you are 100% sure about it

- Pay attention to the timestamp validation logic. For some use-cases (e.g. synthetic DEX), you would need to cache the latest values in your contract storage to avoid arbitrage attacks

- Enable a secure upgradability mechanism for your contract (ideally based on multi-sig or DAO)

- Monitor the RedStone data services registry and quickly modify signer authorisation logic in your contracts in case of changes (we will also notify you if you are a paying client)
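One possible reading of the caching advice above is that a contract should remember the newest timestamp it has accepted and refuse anything older. Otherwise a caller could choose, among several still-valid signed prices, the one most favourable to them. The sketch below models that idea off-chain; the PriceCache class is hypothetical and stands in for contract storage.

```python
from typing import Optional


class PriceCache:
    """Keeps the freshest accepted value and refuses anything that is not newer."""

    def __init__(self) -> None:
        self.value: Optional[int] = None
        self.timestamp_ms: int = 0

    def update(self, value: int, timestamp_ms: int) -> bool:
        """Store the value if it is strictly newer than the cached one."""
        if timestamp_ms <= self.timestamp_ms:
            return False  # stale or replayed data is ignored
        self.value = value
        self.timestamp_ms = timestamp_ms
        return True


cache = PriceCache()
accepted_first = cache.update(100, 1_000)  # True: the cache was empty
accepted_stale = cache.update(90, 900)     # False: older than the cached value
```

Whether stale data should be ignored or should revert the whole transaction depends on the protocol.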

Recommendations

- Try to design your contracts in a way where you don't need to request many data feeds in the same transaction

- Use ~10 required unique signers for a good balance between security and gas cost efficiency

Benchmarks

You can check the benchmarks script and reports here.

Gas report for 1 unique signer:

    {
      "1 data feed": {
        "attaching to calldata": 1840,
        "data extraction and validation": 10782
      },
      "2 data feeds": {
        "attaching to calldata": 3380,
        "data extraction and validation": 18657
      },
      "10 data feeds": {
        "attaching to calldata": 15832,
        "data extraction and validation": 95539
      }
    }

Gas report for 10 unique signers:

    {
      "1 data feed": {
        "attaching to calldata": 15796,
        "data extraction and validation": 72828
      },
      "2 data feeds": {
        "attaching to calldata": 31256,
        "data extraction and validation": 146223
      },
      "10 data feeds": {
        "attaching to calldata": 156148,
        "data extraction and validation": 872336
      },
      "20 data feeds": {
        "attaching to calldata": 313340,
        "data extraction and validation": 2127313
      }
    }
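To compare the two reports, the figures above can be summed per configuration. The snippet only re-uses the numbers from the reports. The dictionary layout and helper names are ad hoc.

```python
# gas_report[signers][feeds] = (attaching to calldata, data extraction and validation)
gas_report = {
    1: {1: (1840, 10782), 2: (3380, 18657), 10: (15832, 95539)},
    10: {
        1: (15796, 72828),
        2: (31256, 146223),
        10: (156148, 872336),
        20: (313340, 2127313),
    },
}


def total_gas(signers: int, feeds: int) -> int:
    """Total overhead: calldata cost plus extraction and validation cost."""
    return sum(gas_report[signers][feeds])


def gas_per_feed(signers: int, feeds: int) -> float:
    return total_gas(signers, feeds) / feeds


one_signer_one_feed = total_gas(1, 1)     # 12622
ten_signers_one_feed = total_gas(10, 1)   # 88624
ten_signers_ten_feeds = total_gas(10, 10) # 1028484
```

In these reports, moving from 1 to 10 unique signers raises the cost of a single feed roughly sevenfold, and requesting 10 feeds with 10 signers already costs about one million gas, which is why the recommendations above suggest limiting the number of feeds per transaction.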